Watson for Patient Safety

company

IBM Watson Health

client

Celgene

role

Lead UX Researcher

year

2016-2017

Challenge

In 2016, my team at IBM Watson Health was charged with developing a new, end-to-end product in the Drug Safety space, with anchor client Celgene. Our goal was to manage the hundreds of adverse event reports Celgene received daily in order to efficiently identify side effects, determine their severity and causality, package them for FDA reports, and learn from the data to improve their therapeutics.

My Role

As UX Research Lead, I led a team of four to define a product vision that used AI to meet the business's efficiency needs while being desirable to end users. We built trust between users and the AI system to turn an antiquated process into one that looked “like it was actually built by someone who knows [Safety], all of the white noise is removed,” in the words of our executive stakeholder.

Outcomes

Background

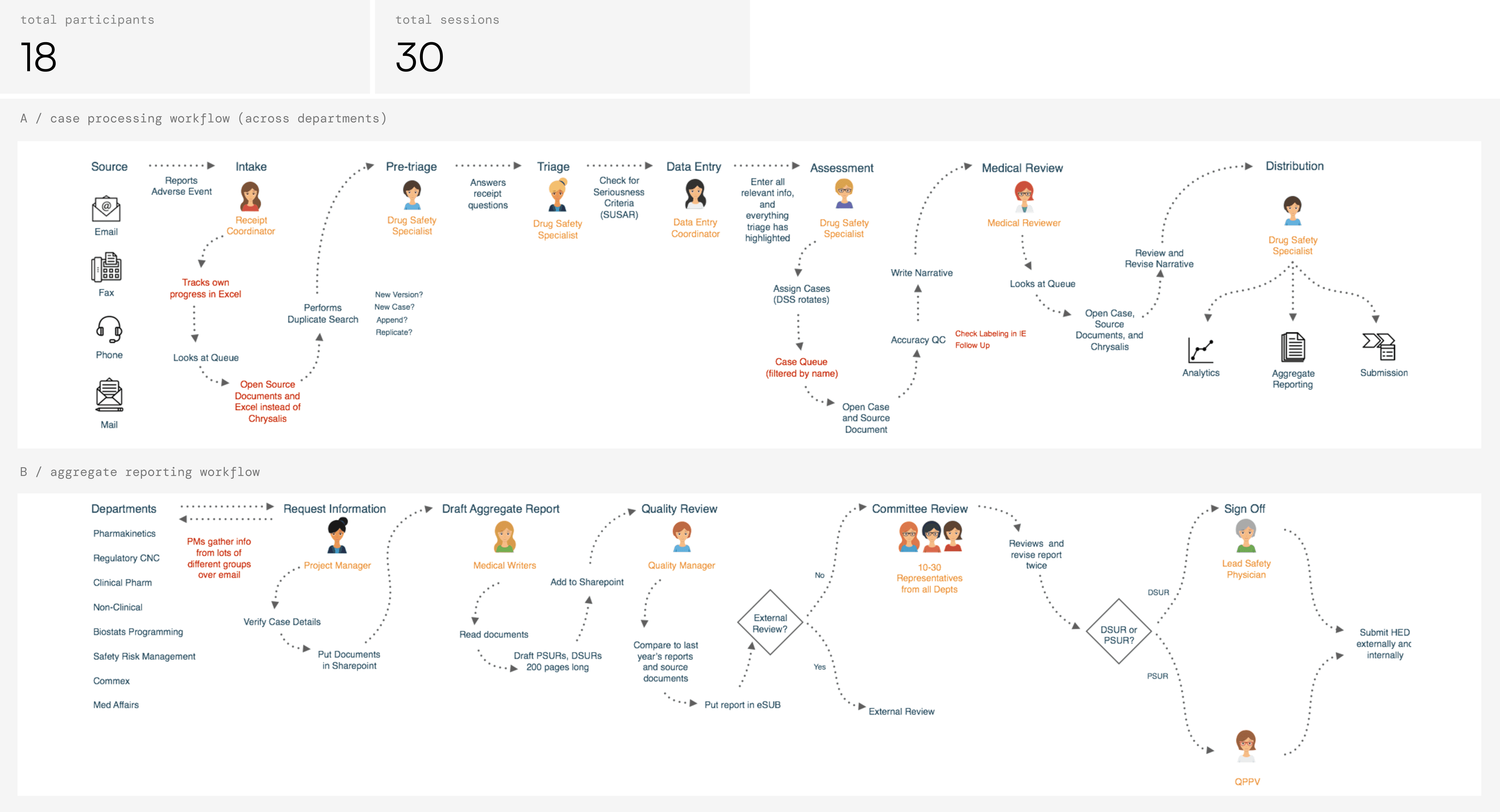

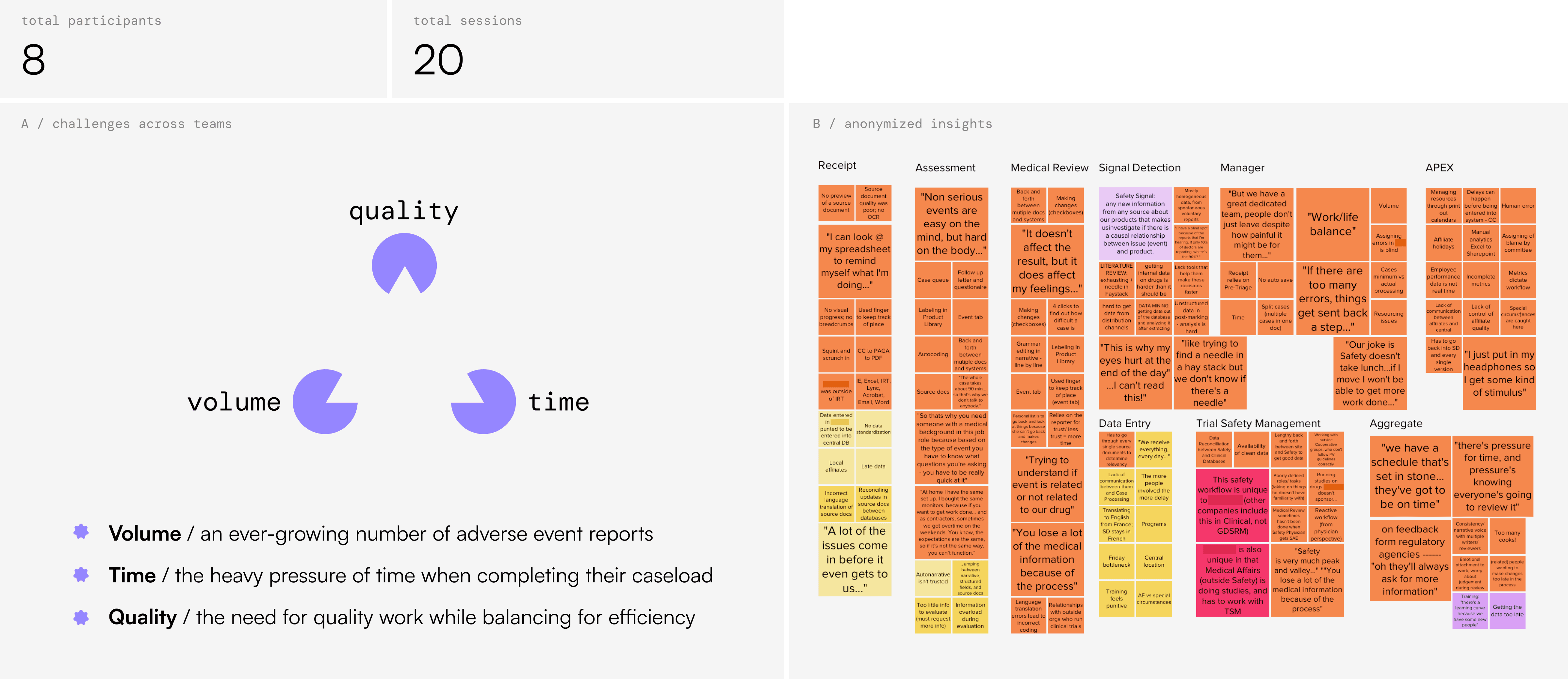

In 2016, IBM Consulting prototyped a blackbox AI solution for Celgene Drug Safety; however, it was unclear whether the solution would actually make a good product. When my team joined the project, I first led a series of user interviews that revealed just how complex, labor-intensive, and stressful Drug Safety was. An individual adverse event report passed through seven different departments sequentially to be processed. Teams were pressured by their supervisors to work as fast as possible due to a never-ending stream of incoming reports, but mistakes could lead to regulatory backlash and missed safety signals.

Approach

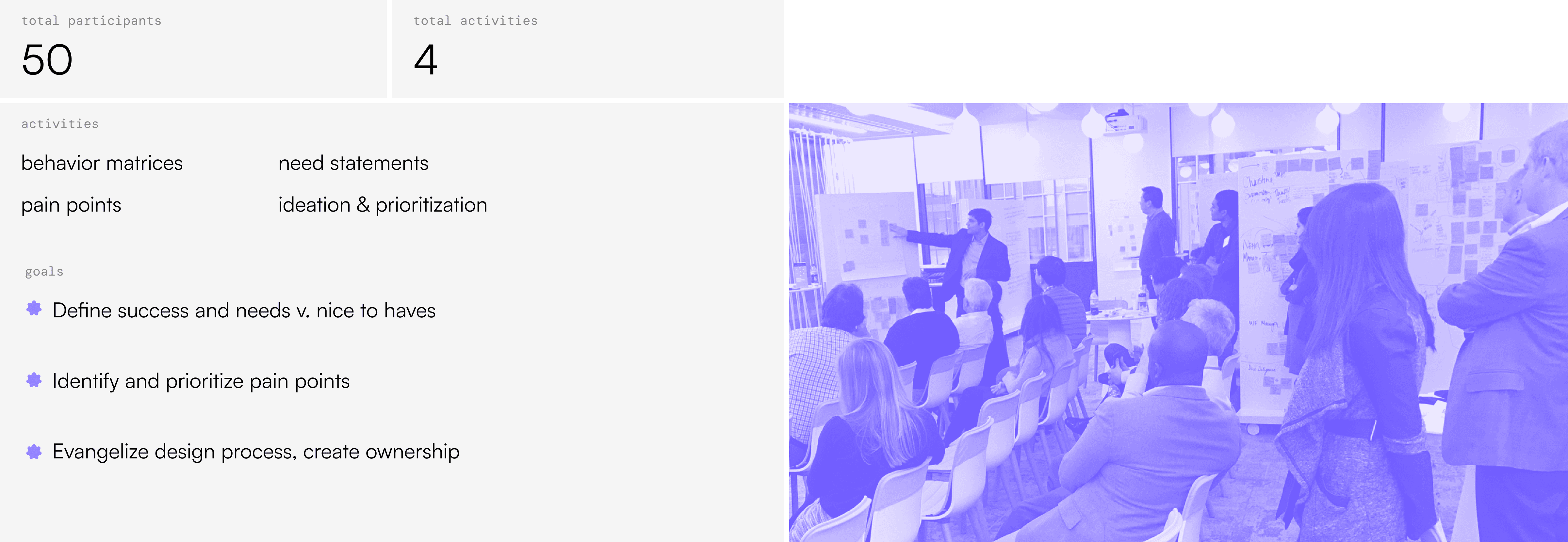

To figure out what would ease this pressure, I co-organized and led a design workshop and invited a diverse slice of Celgene to join us, from executive stakeholders to data entry ICs. The activities we ran provided a safe space for Safety staff to describe the challenges they faced. Celgene's technology stack was antiquated; employees told us they needed Excel, not AI. The earliest stages of Drug Safety felt the most time pressure because they handled a constant stream of adverse event reports submitted to the company at any time, in any format. Apprehension about AI was also quite high.

There was a clear opportunity to use OCR + AI to streamline intake and data entry, but first we needed to account for the AI apprehension we saw. I spent a week each month conducting onsite contextual inquiry across departments to get a better sense of the realities of their work. This contextual inquiry was initially a tough sell, so we built relationships across teams and developed anonymization policies and other safeguards to protect the individuals we learned from.

"Our joke is, Safety doesn't take lunch," said one Data Entry manager, who did her best to protect her team but found herself just as underwater as they were. Polarized screens on desktops and 'rearview mirrors' in cubicles were signs of a workforce under constant company observation and pressure to perform. And AI tools that IBM Consulting had built to reduce team burden were instead perceived as 'job destroyers.'

The fundamental barrier to adoption was a lack of trust in both AI and the company. Conversation topics with employees ranged from fear of automation and job loss to pride in helping people at work despite the stress. It was also apparent how much critical domain knowledge permeated the Safety team, from data entry to medical review staff. These findings dovetailed with a compliance ecosystem that couldn't use full AI automation due to FDA regulations. It was clear we needed to evolve the original blackbox AI prototype into a system that could address both human and regulatory concerns.

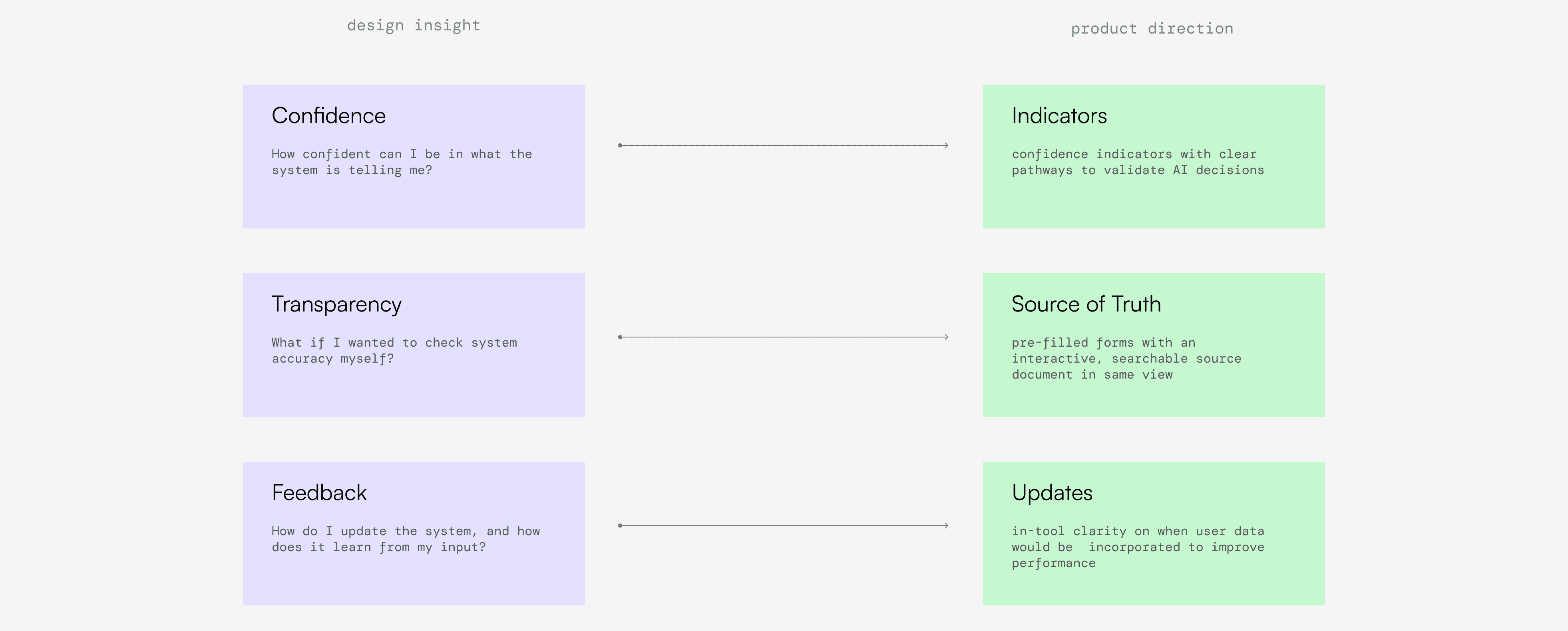

By workshopping a series of sacrificial concepts with users, we found that most participants were uncomfortable with AI that made choices for them without justification. However, baseline trust in AI varied widely. Some employees were skeptical of or openly hostile to AI, while others said they'd default to "trusting the computer," and we needed to account for both. Regardless of skepticism, "how do I give this thing feedback" was the most common question we received. Safety staff knew the scientific and regulatory ecosystem changed regularly, and did not want to repeatedly teach the system the same lessons.

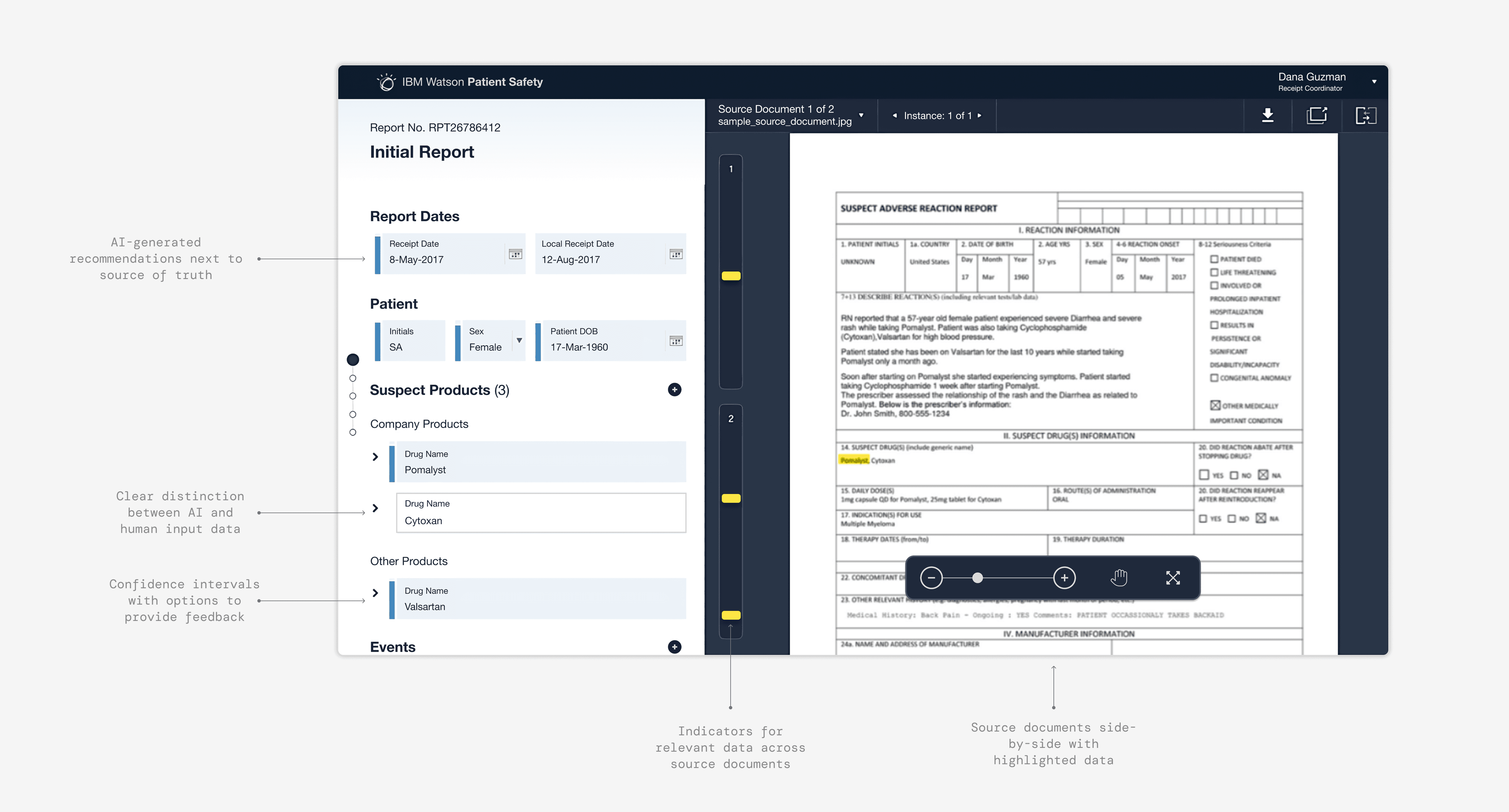

We arrived at an interface that kept the relevant source-of-truth document always present alongside the workspace. We added confidence indicators that highlighted AI input and encouraged appropriate reliance on AI suggestions, and built flows that gave clarity on system updates. Twenty-five rapid evaluative tests on our updated design showed a significant increase in trust and adoption, which demonstrated to Celgene leadership that these new capabilities were worthwhile.

Impact

We presented the final product vision to executives at multiple pharmaceutical companies and received very positive responses, with one executive noting, “this looks like it was actually built by someone who knows [Safety], all of the white noise is removed.” This also bolstered the case for the work as a generalized product, and not a bespoke solution for Celgene. With buy-in from IBM's consulting and development teams, the project transitioned from design to implementation in 2017.

Learnings

The learnings about AI in complex, high-stakes situations like healthcare feel just as relevant today as they were during the project:

Because of UX research, our biggest design advocates were our executive stakeholders. Clients will go to bat for you if you show them you understand their world.

Jamming a new technology into a challenging ecosystem magnified skepticism and broke trust. A diversity of perspectives (while protecting vulnerable users) is critical for a useable, trustworthy product.

Prototypes without a rich understanding of our users reinforced preconceived notions. We needed generative research with end users to develop a solution that would actually be adopted.
